What is Elasticity?

Quick Definition: Elasticity in cloud computing refers to the dynamic scaling up and down of resources and architecture in response to changing demands, which provides stability and maximizes cost efficiency.

Building on the cloud has many advantages over managing racks of physical servers. The management of logistics like power and cooling is handed off to a third party. New servers and other resources are available at the click of a mouse. Your infrastructure can scale up to meet demand as needed, then shrink down to avoid over-provisioning.

That final point is our topic for today, a concept known as elasticity. Like a rubber band, your infrastructure can stretch to several times its size, and then return right back to where it started. This concept leverages the power and magic of cloud infrastructure and is driven by automation. Today we'll dig further into elasticity: its components, benefits, challenges, and what future trends might hold for this cloud superpower.

What is Elasticity?

Elasticity is the ability of a cloud computing system to dynamically, quickly, and automatically adjust resource allocation. Specific metrics are tied to certain actions within the environment, allowing the system to make automatic adjustments. Unlike traditional scaling approaches in data centers, elasticity in the cloud emphasizes automation and speed, keeping users happy without requiring manual intervention by admins.

Those last two points are a huge differentiator between elasticity and another cloud concept called scalability. Automation is the secret sauce behind elasticity, with resources increasing and decreasing automatically as needed, whereas scalability is more about having the choice to deploy as many servers as you need at the beginning of a project.

Scalability is still a huge advantage of cloud computing, because fleets of servers and storage can be initially provisioned within minutes, whereas standing up similar hardware in a data center could take weeks or months.

The on-demand increase of resources is what makes elasticity so transformative. Instead of making educated guesses about how many servers to provision, you start with a base number and let the system add more, adjusting to demand. There's no underestimating resources and leaving your users to suffer from overloaded servers.

Key Components of Elasticity

It's no secret where the magic of elasticity comes from: automation. The automatic scaling of resources is the key to an elastic infrastructure. This automation can be triggered by any number of metrics, like active users, CPU usage, memory usage, or network bandwidth. These then fire off one of two actions, which are the other two key components of elasticity: the provisioning or de-provisioning of resources.

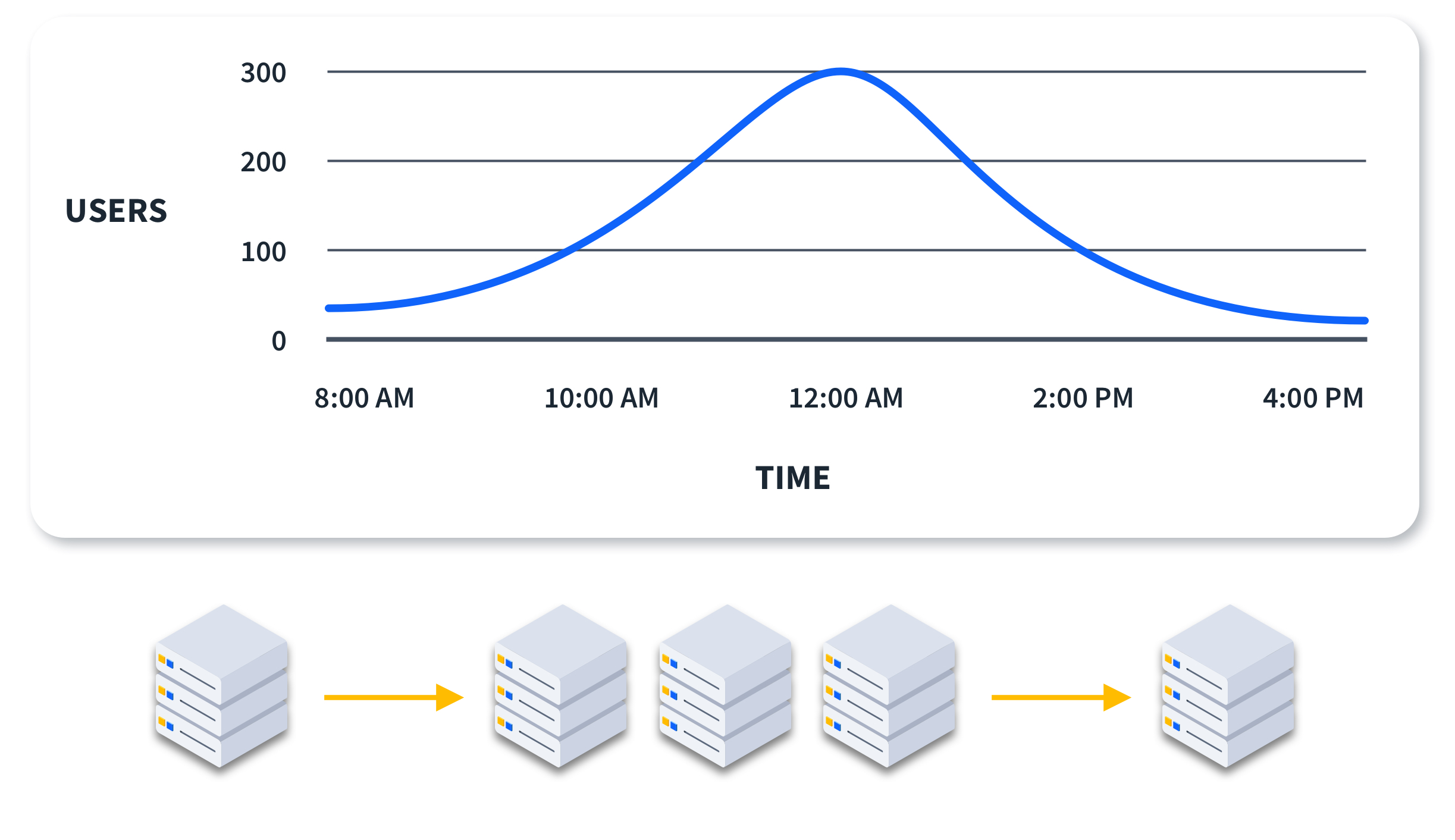

For example, say you have a site running in AWS: a single EC2 instance sitting behind a load balancer. The site is stable with one server at up to 100 concurrent users, but if users exceed that, performance starts to suffer.

AWS has a service called AWS Auto Scaling, which monitors those key metrics and can respond. In this case, you can set Auto Scaling to watch the number of concurrent users and, when it breaks 100, spin up a second EC2 instance, then a third at 200 users, and so on. All these instances would be added to the load balancer, keeping traffic flowing to all the live instances.

This is great until the user count falls as fewer people use your site. Say after a spike to 250, it drops back to 50. Three hosts are now overkill and are running up your AWS bill. Auto Scaling is smart in this case as well, terminating unnecessary instances to de-provision capacity and avoid overspending.
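The scale-out and scale-in decision in this example can be sketched in a few lines of Python. This is only an illustration of the logic, not how AWS Auto Scaling is implemented. The function names are hypothetical, and the 100-users-per-instance figure is simply the capacity assumed in the scenario above.

```python
import math

# Assumed capacity from the example: one instance handles up to 100 users.
USERS_PER_INSTANCE = 100


def desired_instances(concurrent_users, users_per_instance=USERS_PER_INSTANCE):
    """Return how many instances the current load calls for (never fewer than one)."""
    return max(1, math.ceil(concurrent_users / users_per_instance))


def scaling_action(current_instances, concurrent_users):
    """Describe the action needed to move from the current fleet to the target."""
    target = desired_instances(concurrent_users)
    if target > current_instances:
        return f"provision {target - current_instances}"
    if target < current_instances:
        return f"de-provision {current_instances - target}"
    return "no change"


if __name__ == "__main__":
    print(scaling_action(1, 250))  # spike: one instance running, 250 users
    print(scaling_action(3, 50))   # lull: three instances running, 50 users
```

With these assumptions, the spike to 250 users calls for three instances, and the drop back to 50 calls for removing two of them.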

All of this happens automatically, with no manual work for admins responding to performance alerts. If your metrics are tuned well, you can anticipate loads so that new resources are up before users even notice.

Benefits of Elasticity

Elasticity can be a huge benefit in three key areas, the first of which is cost efficiency. While a service like Auto Scaling can provision resources to meet demand, it can also de-provision resources as demand drops. This can save a huge amount of money as it prevents overprovisioning of resources that would be idle or underutilized.
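To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The hourly user counts are made up purely for illustration, and the 100-users-per-instance capacity is an assumption carried over from the earlier example. The sketch compares instance-hours for a fleet sized for peak load against one that follows demand.

```python
import math

# Hypothetical demand over eight hours (illustrative numbers, not real data).
HOURLY_USERS = [40, 60, 120, 250, 180, 90, 50, 30]
USERS_PER_INSTANCE = 100  # assumed capacity of a single instance


def instances_needed(users):
    """Instances required to serve a given number of users, with a floor of one."""
    return max(1, math.ceil(users / USERS_PER_INSTANCE))


def elastic_instance_hours(demand):
    """Instance-hours used when the fleet follows demand hour by hour."""
    return sum(instances_needed(users) for users in demand)


def static_instance_hours(demand):
    """Instance-hours used when the fleet is sized for the peak all day."""
    peak = max(instances_needed(users) for users in demand)
    return peak * len(demand)


if __name__ == "__main__":
    print("elastic:", elastic_instance_hours(HOURLY_USERS))  # 12 instance-hours
    print("static: ", static_instance_hours(HOURLY_USERS))   # 24 instance-hours
```

In this toy scenario the elastic fleet uses half the instance-hours of the peak-sized one. Real savings depend entirely on how spiky your traffic is and how your provider bills.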

The second benefit is improved performance. As we've seen, auto-provisioning adjusts resources to match workload increases. This can dramatically increase responsiveness and reliability.

This increased performance leads to the third and most important benefit: enhanced user experience. It follows naturally from better performance, as responsive applications make for happy users, no matter how many are hitting your site at one time.

Challenges and Best Practices in Implementing Elasticity

While an elastic infrastructure sounds like magic, it does come with challenges. The first is setting up accurate monitoring of server metrics. If metrics trigger auto-provisioning, then those scaling actions are only as good as the data behind them.

The next challenge is considering your app and how it responds to load. How many users are too many for one server? In a multi-server architecture, which server bears the brunt of the load? You must answer these questions; triggering elastic provisioning based on the wrong metrics can be a useless exercise.

These challenges can be met by implementing a few best practices.

Configuring and maintaining monitoring tools takes time and money, but ideally, you already have them in place.

A careful analysis of historical metrics data can be very helpful in planning, along with some trial and error to choose the correct metrics.

Finally, limiting the number of resources that can be provisioned is key; otherwise, unexpected metrics can spawn dozens of expensive, unneeded resources.
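That last practice amounts to clamping whatever the scaling logic asks for between a floor and a ceiling. A minimal sketch, assuming hypothetical bounds of one and five instances (pick limits that match your own budget and baseline):

```python
def clamp_capacity(requested, min_instances=1, max_instances=5):
    """Keep a requested instance count within the configured bounds."""
    if min_instances > max_instances:
        raise ValueError("min_instances cannot exceed max_instances")
    return max(min_instances, min(requested, max_instances))


if __name__ == "__main__":
    # A runaway metric asks for 40 instances; the ceiling holds it at 5.
    print(clamp_capacity(40))
    # A quiet period asks for 0; the floor keeps one instance alive.
    print(clamp_capacity(0))
```

Autoscaling services generally expose minimum and maximum size settings for exactly this reason, so in practice you would configure the limits there rather than write your own clamp.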

Use Cases for Elasticity

Now that you understand what elasticity is and its benefits, let's explore specific use cases.

Web Apps

The most obvious use case is web apps. Using elasticity, these can dynamically scale as loads increase to smoothly handle traffic spikes, whether it's your frontend or backend that's taking a hit.

Specifically, elasticity is great for web app APIs. In the case of increased traffic, larger calls pulling lots of data, or any other performance bottleneck, automatically scaling up the backend API servers to handle the traffic will keep your API operational.

Data Processing

Another less obvious use is data processing. Jobs that require heavy CPU power can benefit from infrastructure scaling on demand to complete them quicker. Care must be taken that the application doing the work can split jobs across multiple servers to take advantage of added resources.
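The caveat about splitting jobs is worth a quick illustration. One simple way to spread a batch across however many workers are currently available is round-robin partitioning. The sketch below is only an assumption about how such an application might divide work; the function name and job list are hypothetical.

```python
def split_jobs(jobs, workers):
    """Distribute jobs round-robin across the given number of workers."""
    if workers < 1:
        raise ValueError("need at least one worker")
    # Worker i takes every `workers`-th job, starting at position i.
    return [jobs[i::workers] for i in range(workers)]


if __name__ == "__main__":
    batch = list(range(7))        # seven hypothetical job IDs
    print(split_jobs(batch, 1))   # one server: it gets everything
    print(split_jobs(batch, 3))   # scaled out to three: [[0, 3, 6], [1, 4], [2, 5]]
```

If the application cannot partition its work along these lines, added servers will simply sit idle while one machine does everything.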

DevOps and CI/CD Tooling

One more use case is with DevOps and CI/CD tooling. As these tools perform builds and deployments, extra resources are required to finish larger jobs and automated QA testing within reasonable time frames. Deprovisioning these environments is also very cost-effective as they would otherwise sit idle outside of active builds and testing.

Future Trends in Elasticity

Since AI adoption is only growing, it is a perfect companion to elasticity. Instead of manually tuning metrics and triggers for auto-provisioning, just let the bots handle it! By monitoring metrics, tracking real-time performance, and analyzing trends, AI can make even better decisions about handling loads.

Another area where elastic infrastructure has a bright future is with microservices. Orchestrators like Kubernetes are built with dynamic infrastructure in mind, with both pod autoscaling and node autoscaling built in.

The pod version watches pods for resource spikes and acts out a defined policy to increase pod replicas, handling the fluctuating load. Node autoscaling does the same, but for adding nodes to the cluster when the existing number of nodes is too small to handle increasing pods. For an infrastructure built around Kubernetes, these are invaluable tools to automate the handling of variable loads.
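The core calculation behind pod autoscaling is simple enough to sketch. The Kubernetes documentation describes the Horizontal Pod Autoscaler as computing desired replicas as the ceiling of current replicas multiplied by the ratio of the current metric value to the target value, and skipping changes when that ratio is close to 1 (a tolerance of 0.1 by default). The Python below is a simplified illustration of that formula, not the actual controller, which also handles details like pod readiness and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric, tolerance=0.1):
    """Simplified form of the replica calculation described in the Kubernetes docs."""
    ratio = current_metric / target_metric
    # Within the tolerance band, leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # Four pods averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90.0, 60.0))
    # Four pods averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30.0, 60.0))
```

Node autoscaling then comes into play when the cluster has no room left to schedule the extra pods this calculation asks for.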

To conclude, elasticity is definitely an infrastructure superpower! Dynamically and automatically allocating resources to keep your sites running seamlessly, no matter the load, can be really transformative for your operations team and users. It makes fuller use of the cloud's potential and is crucial to staying competitive and meeting your users' demands.

Want to learn more about cloud computing? Check out the CBT Nuggets course Intro to Cloud Computing.
