What is Scalability?

Quick Definition: Scalability refers to cloud hosting's ability to quickly increase available resources to support a lasting, long-term increase in demand.

Scalability is a fundamental property that sets cloud computing apart from physical infrastructure. In the old days of on-premises servers, adding compute or storage capacity meant evaluating growth needs, filling out requisition forms, talking to vendors to gather quotes, and then finally ordering, configuring, and racking hardware. Weeks or months later, your hardware would finally be ready to handle the increased requirements.

Not so with the cloud. Scalability in the cloud means all this can be accomplished with a few clicks in minutes. Need to add more servers to a cluster? Want to increase the storage for a database? Need more RAM for a resource-hungry app? Click, click, click, done.

Understanding scalability at a deeper level is important for IT professionals when comparing cloud-based versus physical infrastructures. Let's dig some more into how the cloud completely changes this game.


Scalability answers how quickly, efficiently, and cost-effectively you can allocate more capacity to a system. Any IT system is scalable to some extent; it's just a matter of what you're willing to do and pay to make it happen.

Think of it like a highway. If a city's population increases, a two-lane highway through town might no longer be sufficient, resulting in major traffic congestion during rush hour. So, the city widens the road to four lanes. This big project involves lots of time, money, and frustration during construction, but the result is worth it as traffic flow improves.

However, there is an unfortunate side effect: during off-peak times, the road can be underutilized or almost empty overnight. This is an accepted consequence as the capacity is necessary during key times.

IT systems have similar problems: workloads (traffic) outgrow capacity (number of road lanes) and require additional capacity (more lanes). Scaling to handle the highest loads leaves servers idle at off-peak times, but again, it's an accepted consequence to handle times of higher demand.
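The cost of sizing for the peak is easy to put in numbers. The sketch below uses a hypothetical workload (900 requests per second at rush hour, 120 overnight) and assumes each server handles 100 requests per second; none of these figures come from a real system.

```python
import math


def servers_for_peak(peak_rps: float, rps_per_server: float) -> int:
    """Smallest server count that can absorb the peak load."""
    return math.ceil(peak_rps / rps_per_server)


def utilization(load_rps: float, servers: int, rps_per_server: float) -> float:
    """Fraction of total fleet capacity in use at a given load."""
    return load_rps / (servers * rps_per_server)


# Hypothetical numbers: 900 req/s at peak, 120 req/s overnight, 100 req/s per server.
fleet = servers_for_peak(900, 100)
print(fleet)                                     # 9
print(f"{utilization(900, fleet, 100):.0%}")     # 100%
print(f"{utilization(120, fleet, 100):.0%}")     # 13%
```

Just like the four-lane highway at 3 a.m., the nine servers sized for rush hour sit mostly idle overnight.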

The cloud makes scalability much easier than physical infrastructure. Greater capacity in the form of more servers, more disks, better CPUs, or additional RAM can be added in minutes with little or no downtime. This is a very powerful defining feature of the cloud: adding capacity with very little effort and no upfront expense.

Types of Scalability

Scalability takes two main forms, horizontal and vertical, and containerized applications are a common way to achieve the horizontal kind.

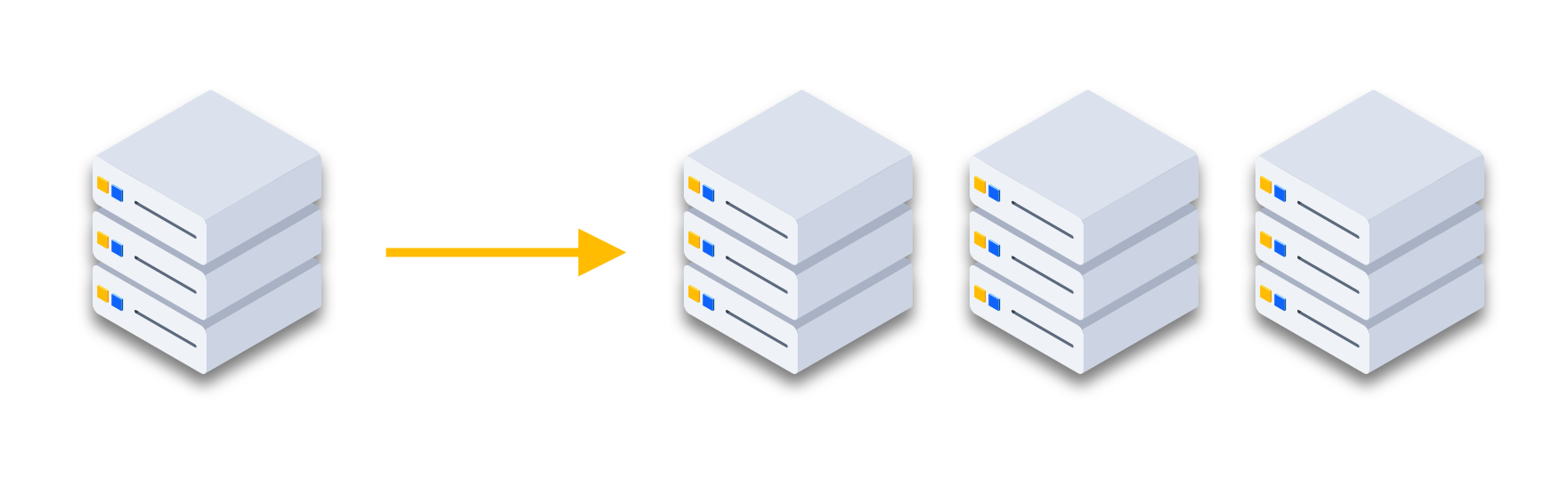

Horizontal scalability is when more instances are added to the system. The simplest form is more servers behind a load balancer, which distributes traffic among the available servers. This also adds high availability, since one server failing doesn't cause an outage. The advantage of cloud computing here is obvious, as capacity can be added as the popularity of a service increases.
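To illustrate both points (more capacity and surviving a failure), here is a toy round-robin load balancer. It is a minimal in-memory sketch, not how any particular product works; the class name and server names like `web-1` are hypothetical.

```python
class RoundRobinBalancer:
    """Toy load balancer: hands requests to healthy servers in turn."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def add_server(self, name):
        # Horizontal scaling: one more instance joins the pool.
        self.servers.append(name)
        self.healthy.add(name)

    def mark_down(self, name):
        # A failed instance is skipped instead of causing an outage.
        self.healthy.discard(name)

    def route(self):
        if not self.healthy:
            raise RuntimeError("no healthy servers")
        # Walk the pool from the current position until a healthy server is found.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers")


lb = RoundRobinBalancer(["web-1", "web-2"])
lb.add_server("web-3")   # scale out
lb.mark_down("web-2")    # one server fails
print([lb.route() for _ in range(4)])  # ['web-1', 'web-3', 'web-1', 'web-3']
```

Traffic keeps flowing after `web-2` fails, and the new `web-3` immediately takes its share.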

Containerized applications are another great example of horizontal scalability. More instances of a single container (or pod, if we're talking Kubernetes specifically) can add capacity for a single microservice.
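In Kubernetes, scaling a microservice horizontally comes down to changing a Deployment's replica count. The sketch below assumes the official `kubernetes` Python client, whose `AppsV1Api` exposes `patch_namespaced_deployment_scale`. The API object is passed in so the function can be tried without a cluster, and the Deployment name `orders-api` is hypothetical.

```python
def scale_deployment(apps_api, name: str, namespace: str, replicas: int) -> None:
    """Set the replica count of a Kubernetes Deployment (horizontal scaling)."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    # Patch the Deployment's scale subresource with the new replica count.
    apps_api.patch_namespaced_deployment_scale(
        name=name,
        namespace=namespace,
        body={"spec": {"replicas": replicas}},
    )


# Against a real cluster (assumes a working kubeconfig):
# from kubernetes import client, config
# config.load_kube_config()
# scale_deployment(client.AppsV1Api(), "orders-api", "default", 5)
```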

Another type of scalability is vertical scalability. For this type, the system resources of an individual system are increased. The classic example is adding RAM or disks to a single server, based on the needs of users or applications. The cloud also shines here, as adding resources to a virtual machine is usually as easy as shutting it down, changing the instance type or adding disk capacity, and starting it back up.
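On AWS, the stop, resize, and start sequence looks roughly like this. It is a sketch assuming a boto3 EC2 client (`stop_instances`, the `instance_stopped` waiter, `modify_instance_attribute`, `start_instances`) and an EBS-backed instance; the instance ID and target type in the usage comment are hypothetical placeholders.

```python
def resize_instance(ec2, instance_id: str, new_type: str) -> None:
    """Vertically scale an EC2 instance: stop it, change its type, start it."""
    ids = [instance_id]
    ec2.stop_instances(InstanceIds=ids)
    # The instance type can only be changed while the instance is stopped.
    ec2.get_waiter("instance_stopped").wait(InstanceIds=ids)
    ec2.modify_instance_attribute(InstanceId=instance_id, InstanceType={"Value": new_type})
    ec2.start_instances(InstanceIds=ids)


# Usage (requires AWS credentials; ID and type are placeholders):
# import boto3
# resize_instance(boto3.client("ec2"), "i-0123456789abcdef0", "m5.xlarge")
```

The brief stop is the "little downtime" mentioned earlier: the server is offline only for the minutes the stop/start cycle takes.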

Benefits of Scalability

We've already covered a few of the benefits of scalability—the ability to handle more traffic, for example. Being able to scale quickly, however, has several other key benefits.

On-Demand Provisioning

The biggest single benefit of scalability in the cloud is adding resources quickly and on demand. Purchasing physical hardware or upgrade parts is time consuming, and so is installing and configuring them once they arrive. Cloud providers abstract all that work away, making scaling horizontally or vertically a much easier exercise.

Increased Adoption

With easier scaling comes a greater willingness for teams to actually do it, which leads to better performance and service availability. That means IT teams deliver more reliably, users are happier, and the organization is healthier overall.

Cost Savings

Scalability can also bring cost savings. A project can be started small, with new resources added over time as it's rolled out to more users or a greater percentage of the organization. Instead of planning with the end in mind (and purchasing hardware that might sit underutilized), the needed resources are added over time as they are actually needed.
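A quick back-of-the-envelope comparison shows where the savings come from. The rollout plan below (2, 4, 6, then 8 servers over four quarters) is hypothetical, and the sketch assumes a flat cost per server per quarter in both cases, which ignores real pricing differences between owned hardware and cloud instances.

```python
def server_quarters_upfront(need_by_quarter):
    # Buy for the end state on day one and run all of it the whole time.
    return max(need_by_quarter) * len(need_by_quarter)


def server_quarters_incremental(need_by_quarter):
    # Add capacity only as each phase of the rollout needs it.
    return sum(need_by_quarter)


plan = [2, 4, 6, 8]  # hypothetical servers needed in each quarter
print(server_quarters_upfront(plan))      # 32
print(server_quarters_incremental(plan))  # 20
```

Under these assumptions, growing with the rollout uses 12 fewer server-quarters, capacity that would otherwise have sat underutilized.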

Challenges in Implementing Scalability

Scalability isn't without its challenges, though. As you select how and when to scale your system, keep these challenges in mind.

Load Balancing in Horizontal Scaling

For horizontal scaling in particular, applications must be designed with load balancing in mind. Web application frontends and backends are good examples: in a three-tier architecture, they can be written so that any instance can handle any request the load balancer sends it. The databases behind those backends don't scale as easily, because keeping data in sync across multiple servers is difficult. Some databases have horizontal scaling built in, but many do not.
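The difference between a tier that scales easily and one that doesn't usually comes down to where state lives. In this minimal sketch (class names and the shopping-cart scenario are hypothetical), a backend that keeps carts in its own memory breaks when the load balancer sends the next request to a different instance, while a backend that keeps nothing locally works anywhere. The shared dict stands in for the database tier, which is exactly the part that is hard to scale.

```python
class StatefulBackend:
    """Keeps carts in local memory: only the instance that saw a request knows about it."""

    def __init__(self):
        self.carts = {}

    def add_item(self, user, item):
        self.carts.setdefault(user, []).append(item)

    def get_cart(self, user):
        return self.carts.get(user, [])


class StatelessBackend:
    """Keeps no data of its own; all instances share one store (the database tier)."""

    def __init__(self, store):
        self.store = store

    def add_item(self, user, item):
        self.store.setdefault(user, []).append(item)

    def get_cart(self, user):
        return self.store.get(user, [])


# Two instances behind a load balancer; the second request lands on the other one.
a, b = StatefulBackend(), StatefulBackend()
a.add_item("alice", "book")
print(b.get_cart("alice"))  # [] (the cart appears lost)

shared = {}
c, d = StatelessBackend(shared), StatelessBackend(shared)
c.add_item("alice", "book")
print(d.get_cart("alice"))  # ['book']
```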

Accurate Metrics in Scaling Decisions

Accurate metrics are another important consideration. Scaling a system should be a data-driven decision; without the data, you can't really know how stressed a system is over time or where the bottlenecks are. Scale deliberately, not through guesswork or by throwing more servers at a problem.
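One way to keep the decision data-driven is to look at a high percentile of utilization over a long window rather than the single worst spike. The sketch below uses the 95th percentile of CPU samples; the 75% threshold and the sample data are hypothetical choices, not a recommendation from any vendor.

```python
import statistics


def p95(samples):
    # quantiles(n=20) returns 19 cut points (5%, 10%, ..., 95%); the last is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]


def needs_more_capacity(cpu_samples, threshold=75.0):
    """Hypothetical rule: scale only if sustained (p95) CPU exceeds the threshold."""
    return p95(cpu_samples) > threshold


brief_spikes = [40.0] * 97 + [99.0] * 3   # mostly quiet, three short spikes
sustained = [80.0] * 100                  # consistently busy
print(needs_more_capacity(brief_spikes))  # False
print(needs_more_capacity(sustained))     # True
```

Looking only at the maximum, both systems would seem to need more servers; the percentile shows that only one of them actually does.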

Managing Costs in Scalable Environments

The final challenge is cost management. Yes, we just listed cost savings as a benefit in the last section, but cloud costs can become a huge liability without careful management. Scaling is easy, so why not scale whenever performance isn't great? As with metrics, scaling shouldn't mean just throwing more servers at a problem.

Scalability vs. Elasticity

We discussed elasticity in another recent article, and the two concepts might sound identical. They are, in fact, very similar, but with one key difference: elasticity is dynamic resource allocation done using automation that responds quickly to changes in workloads.

Scalability is not automatic; it is done manually and usually as a more static, long-term change. Think of scalability as responding to changes over weeks or months, whereas elasticity is responding to real-time changes over minutes or hours.
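To make the distinction concrete, here is the kind of rule an elastic system evaluates automatically. It uses the base replica formula from the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric). The min/max bounds and the example numbers are hypothetical, and the real autoscaler adds a tolerance and stabilization logic that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric, min_r=1, max_r=10):
    # Base HPA-style formula; tolerance and stabilization windows are omitted.
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds.
    return max(min_r, min(max_r, desired))


print(desired_replicas(4, 90, 60))   # 6  (busy: scale out)
print(desired_replicas(4, 30, 60))   # 2  (quiet: scale in)
print(desired_replicas(4, 300, 60))  # 10 (capped by max_r)
```

In the terms used above, running this in a loop every few seconds is elasticity; a person raising `max_r` after reviewing months of growth is scalability.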

Security Considerations in Scalable Environments

Though security should always be at the forefront of any IT architecture changes or considerations, scalability is one area where you can relax a bit (assuming you did everything right to begin with). This is because actions to scale an environment usually don't introduce new risks; in fact, they should inherit any existing security measures.

Take, for example, a server behind a load balancer. Security controls on both should always be in place (up-to-date software, only necessary ports open, etc.). Adding another server with identical configuration and software doesn't introduce new risks, because you've already addressed the existing ones.

The key to security lies in the word "identical." Ideally, new server instances are provisioned through configuration management and automation, such as Ansible or VM images, so configurations stay exactly identical across hosts. Horizontally scaled servers shouldn't be deployed manually, where steps can be missed and new security issues created.
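Whatever tool builds the servers, it helps to verify that they really are identical. The sketch below fingerprints each host's configuration and flags any that drift from a golden baseline. The config keys and host names are hypothetical, and in practice the per-host configs would be gathered by your configuration-management tooling rather than typed in by hand.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    # Serialize with sorted keys so logically identical configs hash identically.
    blob = json.dumps(config, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()


def drifted_hosts(baseline: dict, hosts: dict) -> list:
    """Return names of hosts whose config differs from the golden baseline."""
    want = config_fingerprint(baseline)
    return sorted(name for name, cfg in hosts.items() if config_fingerprint(cfg) != want)


# Hypothetical golden config, e.g. what an Ansible role or VM image would produce.
baseline = {"open_ports": [443], "tls_min_version": "1.2", "packages": {"nginx": "1.24"}}
hosts = {
    "web-1": dict(baseline),
    "web-2": dict(baseline),
    "web-3": {**baseline, "open_ports": [22, 443]},  # built by hand, SSH left open
}
print(drifted_hosts(baseline, hosts))  # ['web-3']
```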

Best Practices for Implementing Scalability

For applications developed in-house, planning for them to run in a scalable manner sets you up for success sooner rather than later. Normally this means separating the app's tiers (frontend, backend, and data) into separate services so the frontend and backend can be easily scaled behind a load balancer.

Designing applications with cloud-native principles in mind also leads to more flexible, scalable, and cost-effective apps. APIs that standardize communication and containerized services that scale easily are great starting points.

Final Thoughts

Scalability can be a powerhouse for IT infrastructure, and cloud computing makes it possible in a way that physical infrastructure just can't match. Cloud-native is the way to go for speed, ease, and cost-effectiveness.

Whether you deploy containers that can be easily scaled horizontally or virtual machine instances that can grow vertically, scalable cloud infrastructure is one mighty way that the cloud has revolutionized infrastructure.

Want to learn more about cloud computing? Check out the CBT Nuggets course Intro to Cloud Computing with Garth Schulte.
